Code for online classification of videos and images. PATHOS draws frames around faces and classifies their facial expressions using a CNN. The CNN was trained on the AffectNet dataset.

During the summer of 2019, I worked on a DNN implementation for the psychology department that classifies a person's face into seven expression categories. The goal was to collect time series of people's attitudes in videos where patients meet doctors. The code is named PATHOS (short for Programmed Algorithm for Tracking Hyperactive Outbursts of Silliness) and is available on my GitHub.

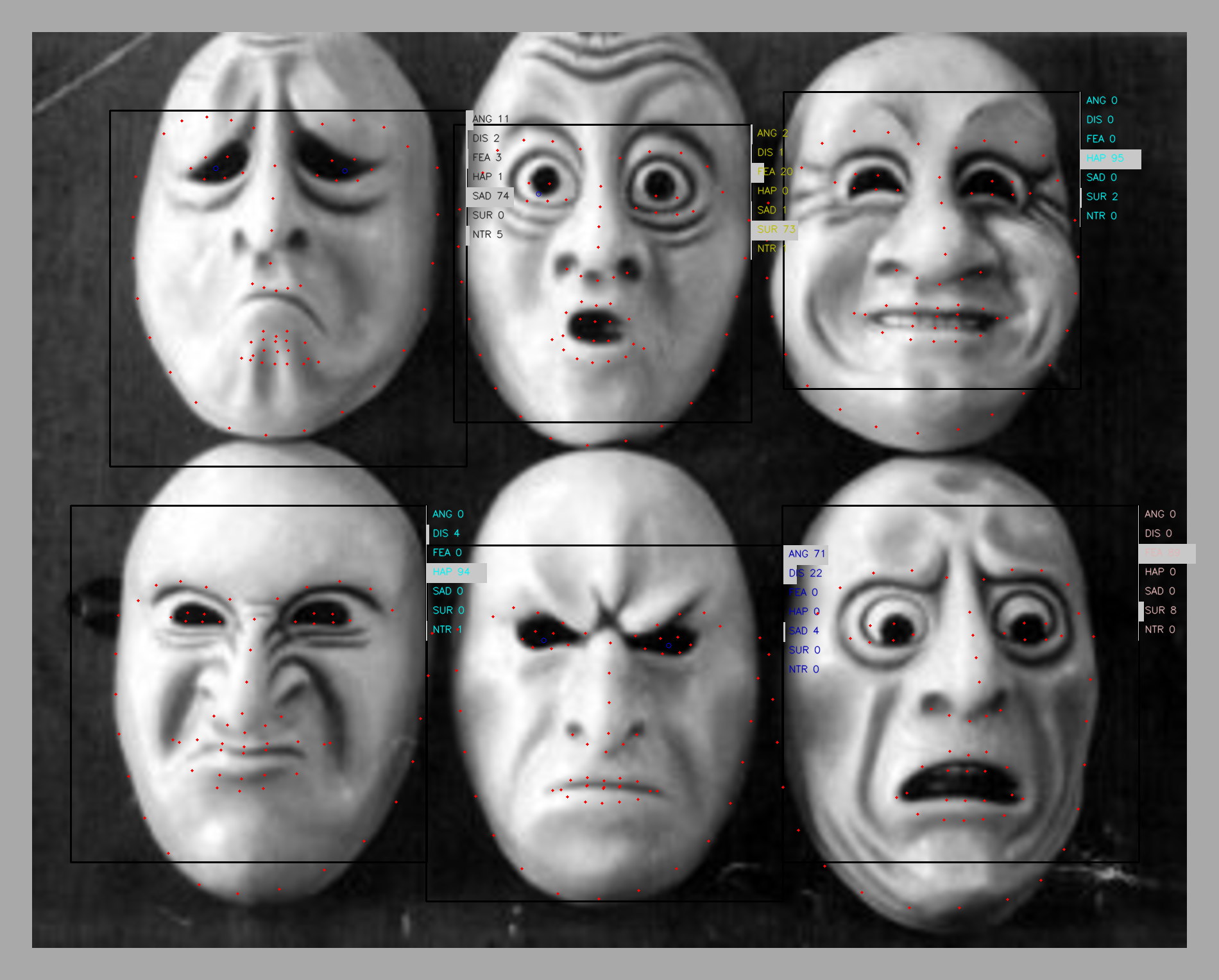

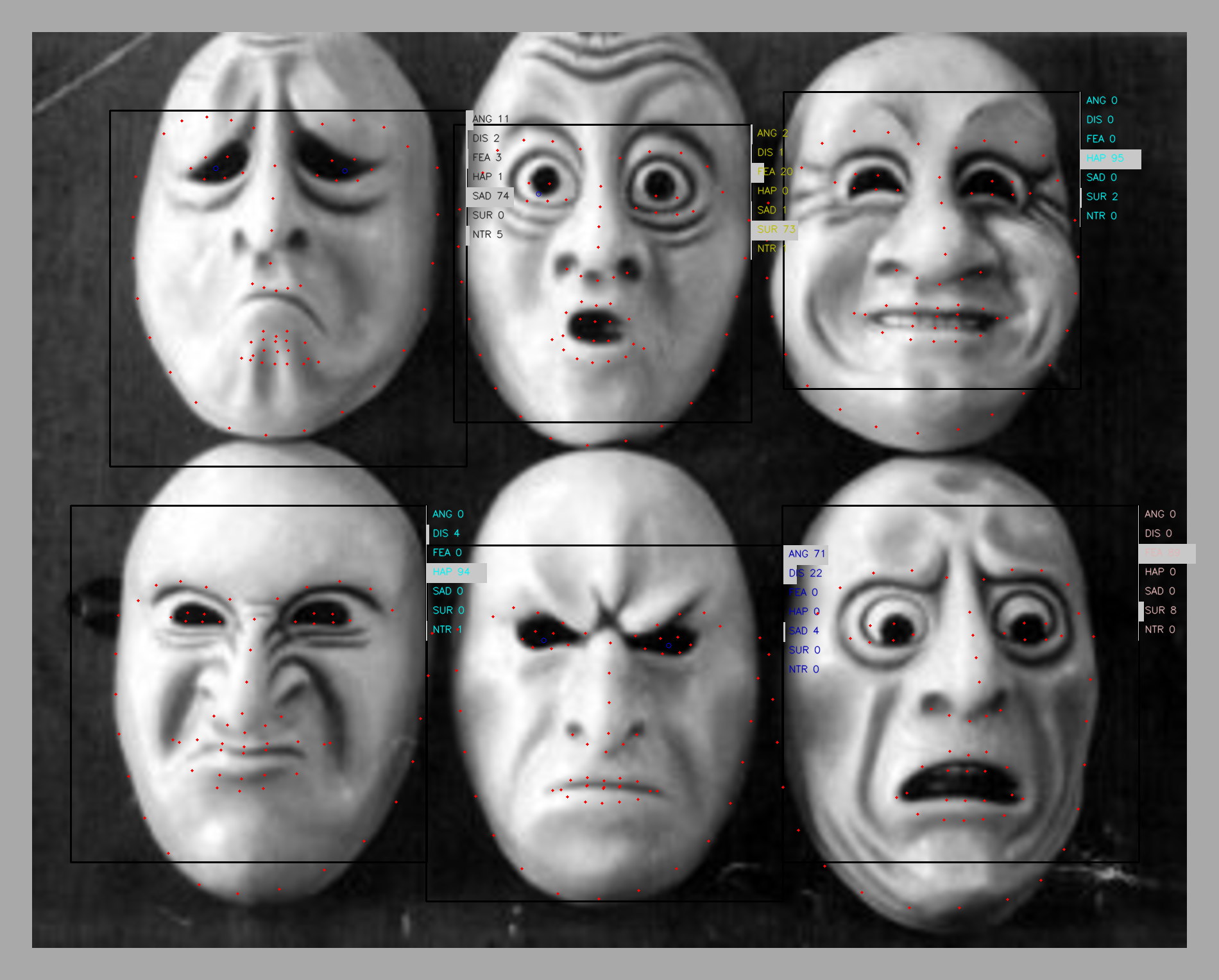

Nearly all of the expression classes are represented in this image, which was processed by PATHOS.

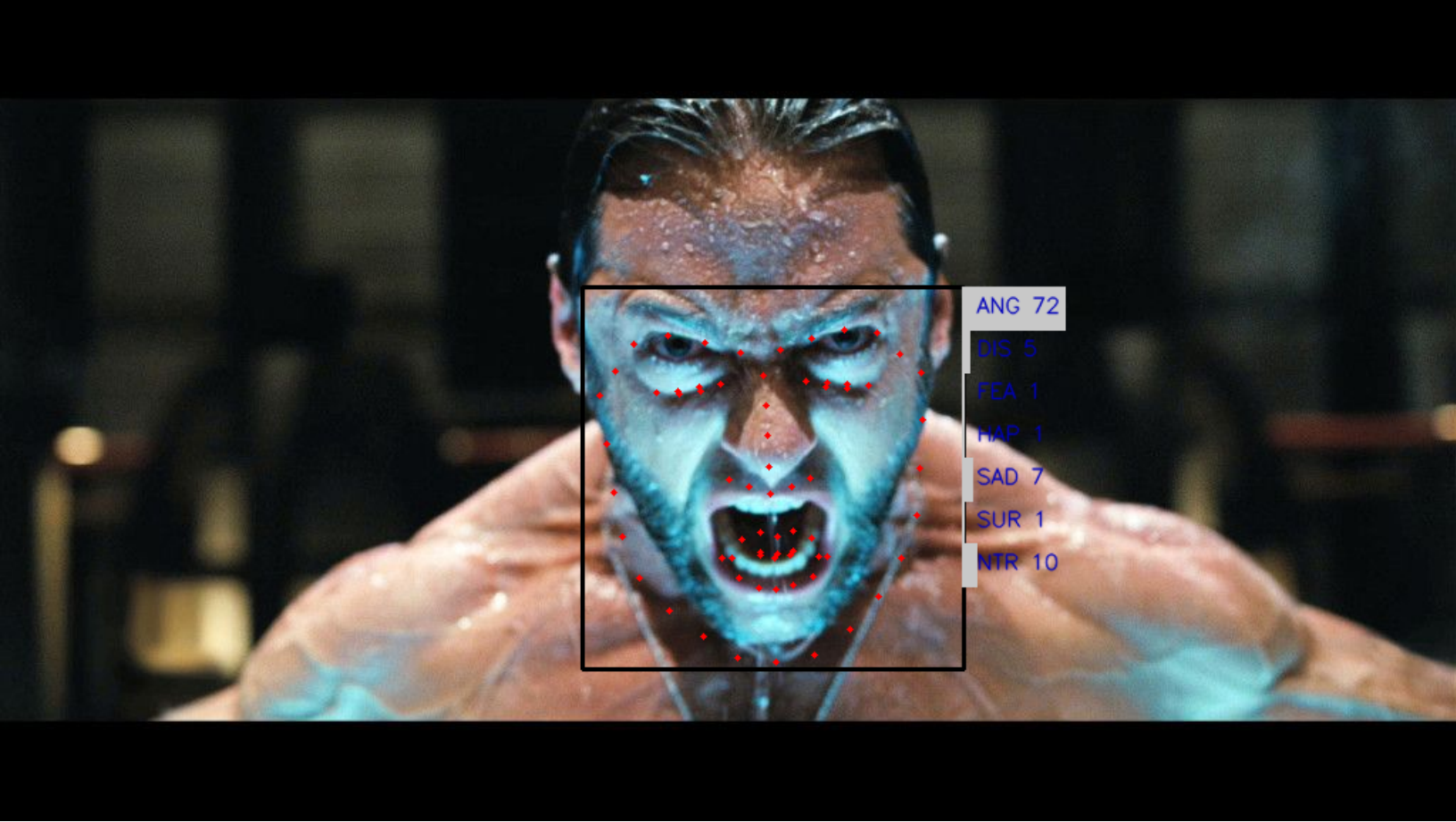

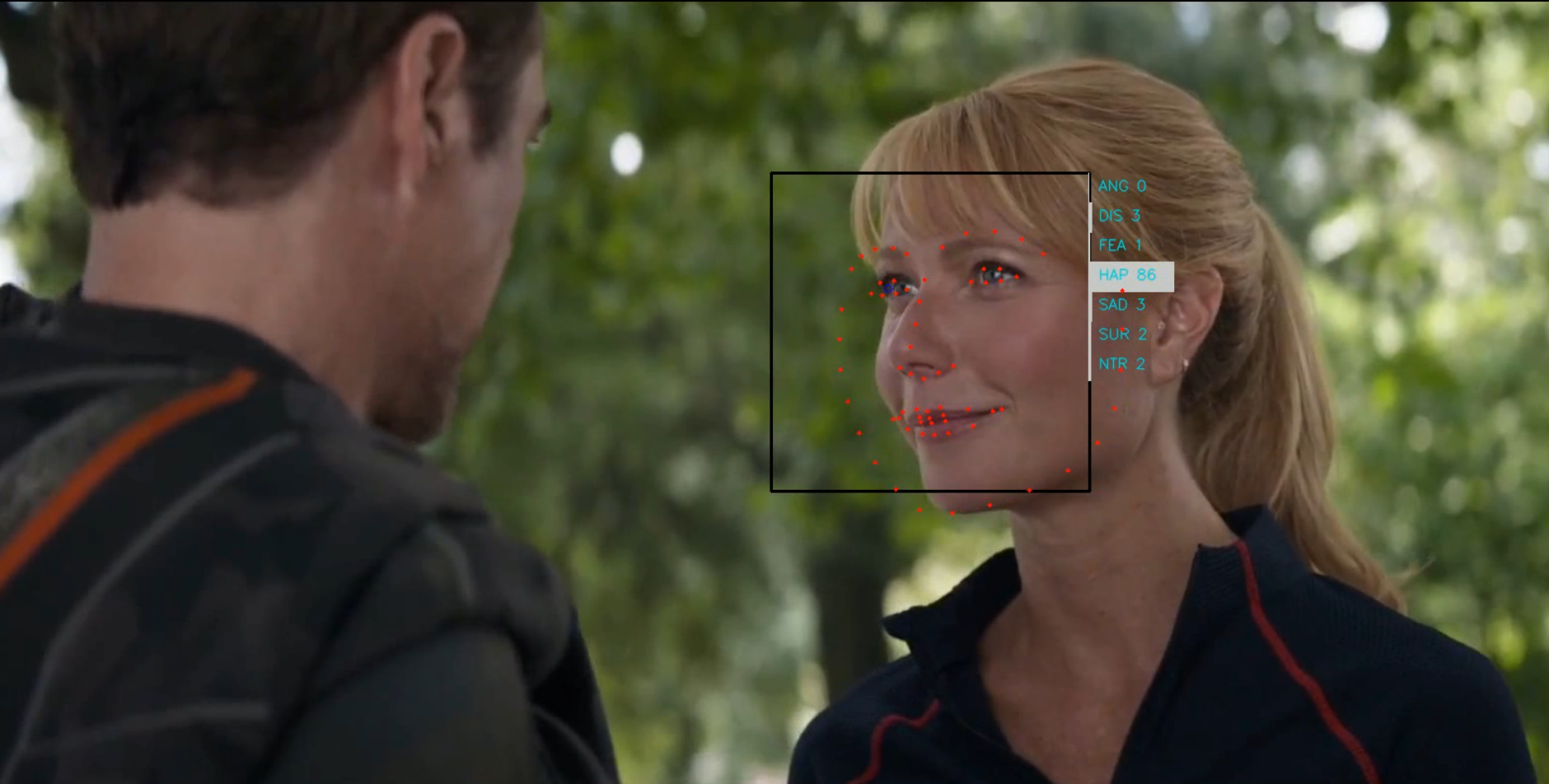

Dlib's frontal face detection algorithm was used to locate faces. Each detection is drawn as a dark frame. The cropped face was preprocessed and then fed into the CNN. The classification model was trained on the AffectNet dataset, which has around $360000$ images of emotions in the wild. The best accuracy achieved was $72\%$, which is not bad!
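The detect-then-crop step can be sketched roughly as follows. This is a minimal sketch and not the PATHOS code: the helper names `detect_faces` and `crop_face`, the 64x64 grayscale input size, the nearest-neighbour resize and the scaling to [0, 1] are all assumptions made for illustration, since the actual preprocessing is not described here. Only the use of dlib's frontal face detector comes from the text.

```python
import numpy as np


def detect_faces(image, detector=None):
    """Return face boxes as half-open (left, top, right, bottom) tuples."""
    if detector is None:
        import dlib  # imported lazily so crop_face works without dlib installed

        detector = dlib.get_frontal_face_detector()
    # The second argument upsamples the image once, which helps with small faces.
    # dlib rectangles are inclusive, so add 1 to get half-open boxes for slicing.
    return [(r.left(), r.top(), r.right() + 1, r.bottom() + 1)
            for r in detector(image, 1)]


def crop_face(image, box, size=64):
    """Crop `box` from an H x W (x C) uint8 image.

    Returns a size x size float32 array scaled to [0, 1] (assumed preprocessing).
    """
    height, width = image.shape[:2]
    left, top, right, bottom = box
    # Detected boxes can extend past the image border, so clamp them first.
    left, top = max(left, 0), max(top, 0)
    right, bottom = min(right, width), min(bottom, height)
    if right <= left or bottom <= top:
        raise ValueError("box lies outside the image")
    face = image[top:bottom, left:right].astype(np.float32)
    if face.ndim == 3:
        face = face.mean(axis=2)  # naive grayscale conversion (assumption)
    # Nearest-neighbour resize with plain NumPy indexing, to avoid extra dependencies.
    rows = np.arange(size) * face.shape[0] // size
    cols = np.arange(size) * face.shape[1] // size
    return face[rows][:, cols] / 255.0
```

Each array returned by `crop_face` would then be batched and passed to the classifier. In practice, creating the detector once and reusing it across frames avoids repeated setup cost.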

The neural network is a shallow CNN. It is implemented in Keras, and you had better use a GPU for real-time processing. (Work in progress.)

For now, since a video is just a series of images, each frame is processed by PATHOS independently, but there are now much better ways to do this in the literature.
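Processing frames independently gives one probability vector per frame, and the expression time series is simply the per-frame argmax. The sketch below shows that, together with a moving average over the probabilities as one very simple way to bring in temporal context. The smoothing is an illustration added here, not something PATHOS does and not one of the methods from the literature; the function names and the window size are assumptions.

```python
import numpy as np


def labels_per_frame(probs):
    """Independent per-frame decision: index of the most likely class in each frame."""
    return np.asarray(probs).argmax(axis=1)


def smooth_probs(probs, window=5):
    """Centered moving average of class probabilities over time (odd window)."""
    probs = np.asarray(probs, dtype=np.float64)
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    # Repeat the first and last frames so the output keeps one row per frame.
    padded = np.pad(probs, ((half, half), (0, 0)), mode="edge")
    kernel = np.ones(window) / window
    return np.stack(
        [np.convolve(padded[:, k], kernel, mode="valid")
         for k in range(probs.shape[1])],
        axis=1,
    )


# Toy example with 2 classes: a one-frame flicker to class 1 disappears after smoothing.
probs = np.array([[0.9, 0.1], [0.8, 0.2], [0.4, 0.6], [0.9, 0.1], [0.8, 0.2]])
print(labels_per_frame(probs))                   # [0 0 1 0 0]
print(labels_per_frame(smooth_probs(probs, 3)))  # [0 0 0 0 0]
```

A wider window removes more flicker but also blurs short, genuine expression changes, so the window size is a trade-off that depends on the frame rate.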

This is a nice project... I don't have the motivation to update it right now, but who knows? I might revisit it in the future with all the new tech that is available 6 years later!